Introduction

Imagine this: a city where police officers already know where the next crime might occur or who might commit it before it happens. It sounds like a scene out of a futuristic movie. But this isn’t fiction. Across the world, AI Crime Prediction Tools are already being used in real police departments to forecast potential crimes before they take place. The concept sounds revolutionary—but should we be excited or alarmed?

- Introduction

- The Technology Behind AI Crime Prediction Tools

- Why Law Enforcement Is Turning to AI

- Success Stories—Where It's Worked

- The Dark Side—Where It Went Wrong

- Ethical Dilemmas in AI Crime Prediction

- The Question of Bias—Built Into the Code?

- Legal Implications—Is AI Evidence Even Admissible?

- Human Rights vs. Algorithmic Policing

- Transparency and Accountability—Who's Watching the Watchers?

- Alternatives to Predictive Policing—What Else Can We Do?

- Public Perception—Fear, Trust, and the Future of Safety

- The Global View—How Other Countries Use AI in Policing

- The Road Ahead—Regulations, Reforms, and Responsibilities

- Conclusion

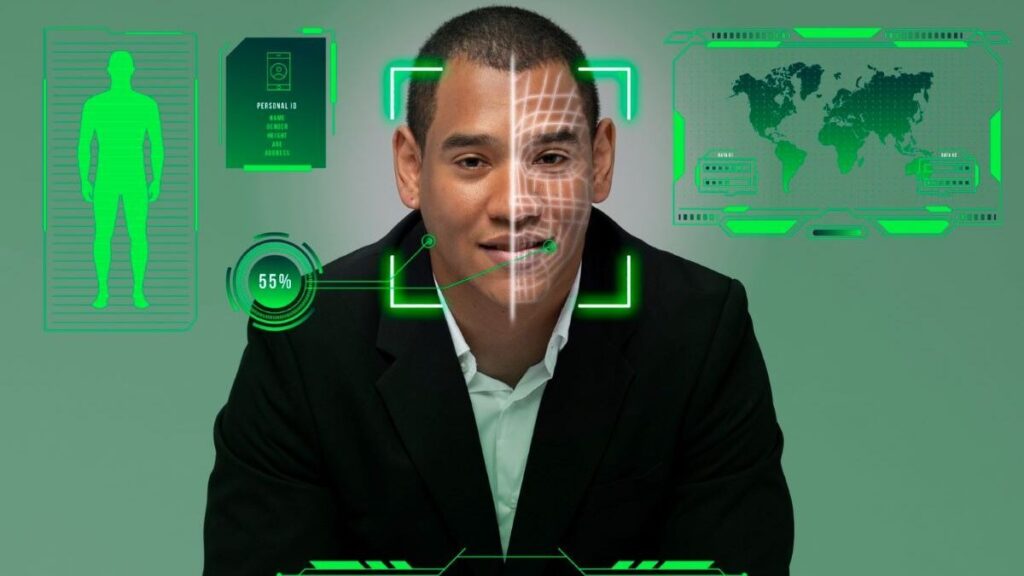

AI Crime Prediction Tools are part of what’s known as “predictive policing,” a strategy that uses algorithms, crime statistics, and behavioral data to identify trends and make predictions. These tools promise smarter, more efficient policing. They claim to save time, allocate resources wisely, and even prevent crimes before they happen. With increasing support from governments and tech firms, they’re gaining traction quickly.

However, with great power comes great responsibility, and controversy. Critics argue that these tools often reflect and amplify existing biases in law enforcement. If the historical crime data used to train AI systems is already flawed or racially biased, then predictions made by these tools can end up unfairly targeting certain groups. Instead of making policing fairer, AI Crime Prediction Tools might end up reinforcing the very inequalities they were meant to reduce.

What makes this debate even more urgent is the rapid pace of implementation. From the United States to the UK, from China to India, law enforcement agencies are deploying these tools without fully understanding their consequences. The question is no longer if we should use them, but how and to what extent.

In this article, we’re diving deep into the world of AI Crime Prediction Tools—how they work, their real-life applications, the ethical concerns they raise, and whether we should be worried. Buckle up; it’s going to be a wild ride through data, justice, and the future of public safety.

The Technology Behind AI Crime Prediction Tools

Let’s demystify what’s under the hood of AI Crime Prediction Tools. At their core, these tools are built on algorithms—mathematical formulas that learn patterns from historical crime data. They’re like highly analytical detectives, except they don’t sleep, don’t forget, and don’t second-guess themselves.

So, how exactly do they work? First, massive datasets are fed into the system. This data can include past crime reports, arrest records, time and location details, gang affiliations, and even social media activity. Machine learning algorithms then process this data, identify recurring patterns, and estimate where, when, or among whom future crimes are most likely, based on how closely current conditions resemble past ones.

Some tools, like PredPol (short for “predictive policing”) and HunchLab, focus on locations. They predict where crimes are likely to occur and suggest increased patrols in those areas. Others, including systems built on Palantir’s software, go a step further by flagging individuals who might be involved in future criminal activities based on their behavior or associations. Scary? Maybe. Powerful? Absolutely.

These systems often rely on two major types of prediction: place-based and person-based. Place-based tools look at high-crime zones and try to forecast future hotspots. Person-based tools are more controversial—they use social ties, records, and interactions to flag individuals as high-risk.
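
To make the place-based idea concrete, here is a minimal Python sketch. It is not the method of any real product, which tend to use more elaborate models. It assumes incidents are plain (x, y) coordinates, splits the map into square grid cells, and ranks the cells by how many past incidents landed in each. The function name `top_hotspots`, the cell size, and the sample coordinates are all made up for illustration.

```python
from collections import Counter

def top_hotspots(incidents, cell_size=1.0, k=2):
    """Rank grid cells by how many past incidents fell inside them."""
    counts = Counter(
        (int(x // cell_size), int(y // cell_size)) for x, y in incidents
    )
    return counts.most_common(k)

# Made-up (x, y) incident coordinates, in arbitrary map units.
incidents = [
    (0.2, 0.3), (0.8, 0.1), (0.5, 0.9),  # three incidents in cell (0, 0)
    (3.1, 3.4), (3.7, 3.2),              # two incidents in cell (3, 3)
    (7.5, 1.2),                          # one incident in cell (7, 1)
]

hotspots = top_hotspots(incidents)
print(hotspots)
```

Notice that a cell’s rank depends entirely on what was recorded there in the past, which is why the quality of the underlying records matters so much.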

It’s worth noting that not all AI Crime Prediction Tools are created equal. Some use advanced neural networks capable of deep learning, while others operate on simpler statistical models. The more sophisticated the tool, the more nuanced (and sometimes mysterious) its predictions become.

But here’s the catch: the tools are only as good as the data they’re trained on. If the historical records are skewed, incomplete, or wrong, the predictions will be too. This brings up serious concerns about fairness, accuracy, and accountability. After all, these tools don’t just recommend extra patrols; they can influence real-world decisions like arrests, surveillance, and even sentencing.

In short, while AI Crime Prediction Tools have the potential to revolutionize policing, they also open a Pandora’s box of ethical and legal questions. Understanding how they work is the first step in holding them accountable.

Why Law Enforcement Is Turning to AI

Law enforcement agencies worldwide are under pressure to do more with less. Shrinking budgets, rising crime rates, and increasing scrutiny have forced departments to look for innovative solutions, and that’s where AI Crime Prediction Tools come into play.

One of the biggest selling points of these tools is efficiency. Traditional policing methods can be time-consuming and resource-heavy. Officers spend hours combing through data manually, trying to spot patterns. But AI Crime Prediction Tools can analyze years of data in seconds, providing actionable insights faster than any human could.

Then, there’s the appeal of being proactive instead of reactive. Instead of waiting for a crime to happen, predictive policing aims to stop it before it starts. This shift could, in theory, save lives, protect property, and reduce the burden on the criminal justice system.

Another benefit often highlighted is improved resource allocation. AI can help police departments decide where to send their limited workforce. For example, if the system predicts a surge in burglaries in a particular neighborhood, officers can be deployed there before the spike occurs. This kind of data-driven strategy is especially useful for understaffed departments.

There’s also the influence of public and political pressure. Politicians want to come across as strong on crime, while communities wish for safer streets. Investing in AI Crime Prediction Tools gives leaders a way to show they’re embracing innovation and tackling issues with the latest tech.

However, the rush to adopt these tools often skips over essential discussions about ethics, privacy, and oversight. While the advantages are clear on paper, the reality is far more complex. The effectiveness of these tools depends heavily on how they are implemented and monitored.

So yes, law enforcement is turning to AI—but whether this trend leads to safer communities or more surveillance depends on the choices we make next.

Success Stories—Where It’s Worked

Despite the controversies, there are places where AI Crime Prediction Tools have shown promising results. Several police departments and municipalities have reported measurable success after adopting these technologies, though it’s essential to examine these claims with a critical eye.

Take Los Angeles, for example. The LAPD began experimenting with PredPol in the early 2010s. The system focused on predicting where property crimes like burglary and auto theft were likely to occur. Officers were then sent to patrol these areas proactively. According to early reports, neighborhoods saw reductions in crime, and police praised the system for helping them work more strategically.

In Chicago, the police department created the Strategic Subject List (SSL), a person-based predictive tool. This list flagged individuals based on their past behavior, gang affiliations, and likelihood of being involved in a shooting, either as a victim or perpetrator. The goal was not just to prevent crime but to intervene early, potentially offering social services or community support.

The UK has also dipped its toes into predictive policing. In Kent, authorities used AI to identify “predictive hot spots” based on previous crime patterns. The tool helped police focus on specific neighborhoods and reportedly led to increased arrest rates for certain offenses.

These success stories have helped build the case for broader adoption. Supporters argue that AI Crime Prediction Tools free up officers to focus on serious crimes, reduce the chances of random patrolling, and help identify threats that might otherwise be missed.

But it’s important to remember that correlation doesn’t equal causation. Just because crime drops after using AI doesn’t mean the tool was solely responsible. Other factors—like community initiatives, economic changes, or seasonal trends—could also play a role.
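
A quick bit of arithmetic shows why the raw before-and-after number can mislead. The sketch below compares a naive estimate with a simple comparison-area adjustment (often called difference-in-differences). All the figures are invented, and the function names are hypothetical; it only illustrates the reasoning, not any department’s actual evaluation.

```python
def naive_effect(before, after):
    """Change in incidents in the area that used the tool."""
    return after - before

def adjusted_effect(tool_before, tool_after, comparison_before, comparison_after):
    """Change in the tool area minus the change in a similar area without the tool."""
    return (tool_after - tool_before) - (comparison_after - comparison_before)

# Invented yearly incident counts.
tool_before, tool_after = 100, 80              # neighborhood using the tool
comparison_before, comparison_after = 100, 85  # similar neighborhood, no tool

naive = naive_effect(tool_before, tool_after)
adjusted = adjusted_effect(tool_before, tool_after, comparison_before, comparison_after)
print(naive, adjusted)
```

In this made-up case, most of the apparent drop also shows up in the neighborhood that never used the tool, so only a small part of it could plausibly be credited to the software.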

Still, these case studies show that when used thoughtfully and transparently, AI Crime Prediction Tools can be part of a smarter, more responsive public safety strategy. The key lies in how they are used, not just that they are used.

The Dark Side—Where It Went Wrong

Of course, not all experiences with AI Crime Prediction Tools have been positive. Some have been downright unsettling. As these tools have become more widespread, so too have the stories of misuse, bias, and unintended consequences.

One of the most infamous cases involves PredPol, the same tool praised in Los Angeles. A study by the AI Now Institute revealed that PredPol disproportionately targeted low-income and minority neighborhoods. Rather than predicting crime accurately, the tool essentially reinforced historical patterns of over-policing. If a neighborhood was heavily patrolled in the past, it became more likely to be flagged again, creating a vicious cycle of surveillance.

In Chicago, the Strategic Subject List was quietly retired after public backlash. Civil rights groups and journalists criticized the lack of transparency surrounding the list. People didn’t know they were on it, and there was no clear way to appeal or contest their inclusion. Worse, there was little evidence that the list helped reduce crime. Instead, it created fear and mistrust in communities already struggling with police relations.

Another troubling example comes from New Orleans, where Palantir Technologies worked with the police department to secretly implement a predictive system without informing the public. Once the partnership was exposed, it raised serious concerns about government transparency and the privatization of law enforcement data.

The dark side of these tools often boils down to the same core issue: biased data. And when decisions about people’s lives are based on faulty predictions, the consequences can be devastating. Imagine being arrested or harassed not for something you did but for something an algorithm thinks you might do.

So, while AI Crime Prediction Tools can be powerful, they’re also dangerous when misused. Without strict oversight, ethical standards, and community involvement, these tools risk becoming instruments of injustice rather than tools of safety.

Ethical Dilemmas in AI Crime Prediction

Let’s face it—predicting someone’s likelihood to commit a crime feels like something ripped out of a dystopian novel. And yet, here we are. The ethical dilemma at the heart of AI Crime Prediction Tools is whether it’s fair—or even moral—to treat someone differently based on a probability, not an action. Think about it. If a teenager living in a high-crime area is flagged by an algorithm as “high risk,” does that mean they should be monitored more closely than their peers? That kind of treatment can affect not just law enforcement behavior but also how teachers, employers, and even neighbors view them. That’s a self-fulfilling prophecy, not merely a prediction.

One major issue here is the concept of “algorithmic determinism.” It assumes people are defined by their environment, past associations, or statistical risk factors. But what about free will? What about personal growth or second chances? Once a system labels someone as “high risk,” it can be hard to shake off that tag—even if they’ve never committed a crime.

It doesn’t stop there. These tools often use historical crime data to make future predictions. Sounds logical. However, what if such information is already skewed? If certain neighborhoods have been over-policed in the past, their higher arrest rates feed into the AI, making them appear more dangerous. The system isn’t learning who will commit a crime—it’s learning who got caught, which often correlates with racial and socioeconomic biases.

That brings us to another big ethical concern: transparency. Most AI Crime Prediction Tools are “black boxes.” They churn out predictions, but we don’t always know how they got there. If someone gets arrested or surveilled based on AI output, shouldn’t we be able to question the logic? Unfortunately, vendors often invoke trade-secret protection to keep their algorithms hidden.

Ethics isn’t just about what we can do with AI; it’s about what we should do. And when it comes to policing, the stakes are just too high to ignore.

The Question of Bias—Built Into the Code?

You’ve probably heard the phrase “garbage in, garbage out.” Nowhere is that more true than with AI Crime Prediction Tools. To create predictions, these algorithms mostly rely on past crime data, but what happens if that data is biased? Simple: the AI learns the same biases and applies them at scale.

Let’s break it down. Say a city has a long history of over-policing minority neighborhoods. That means the arrest records, incident reports, and criminal charges from those areas are far more numerous, not necessarily because there’s more crime, but because there’s more policing. When that data is fed into an AI system, it begins to associate certain zip codes or demographics with criminal behavior. It’s not predicting future crimes—it’s echoing past injustices.

There’s also the issue of feedback loops. Once an area is flagged as “high risk,” police are more likely to patrol it. More patrols mean more arrests, which reinforces the idea that the area is dangerous, which leads to more patrols. The cycle keeps going. It’s like a snowball of suspicion, and it gets bigger and more unjust with each pass.
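
The loop is easy to see in a toy simulation. The Python sketch below is a deliberately simplified model, not a reconstruction of any real system: it assumes two areas with the same underlying crime rate, sends all patrols to whichever area has more recorded arrests, and assumes only patrolled areas generate new records. The function name `simulate_feedback` and every number in it are invented for illustration.

```python
def simulate_feedback(recorded, true_rate, patrols=10, rounds=5):
    """Each round, send every patrol to the area with the most recorded arrests."""
    recorded = dict(recorded)  # copy so the caller's data is left untouched
    history = []
    for _ in range(rounds):
        flagged = max(recorded, key=recorded.get)
        # Only the patrolled area produces new records, even though
        # the underlying crime rate is identical in both areas.
        recorded[flagged] += patrols * true_rate[flagged]
        history.append(dict(recorded))
    return history

initial_records = {"A": 12, "B": 10}  # A starts with slightly more recorded arrests
true_rate = {"A": 0.5, "B": 0.5}      # same real crime rate in both areas

history = simulate_feedback(initial_records, true_rate)
for round_number, snapshot in enumerate(history, start=1):
    print(round_number, snapshot)
```

Area A starts only two arrests ahead, yet it absorbs every patrol and every new record, so the gap widens each round while area B’s numbers never move.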

An alarming example of this is the use of facial recognition in crime prediction. Studies have shown these tools misidentify people of color at a significantly higher rate than white individuals. So when a face-matching system is used in tandem with crime prediction software, you end up with a double-whammy of technological bias.

Of course, tech companies argue that their systems are “objective” because they’re based on numbers, not opinions. But here’s the thing—numbers aren’t neutral when they’re built on biased foundations. That’s like baking a cake with spoiled eggs and expecting it to taste fine just because you followed the recipe.

So yes, bias can be built into the code. And if we’re not careful, AI Crime Prediction Tools won’t reduce inequality—they’ll automate it.

Legal Implications—Is AI Evidence Even Admissible?

Imagine being in court and finding out that the main reason you’re being charged is that a machine said you were likely to commit a crime. Sounds outrageous, right? But this isn’t some sci-fi courtroom drama; it’s a growing concern in the legal world. AI Crime Prediction Tools are starting to influence real legal decisions, and that raises a mountain of legal questions.

For starters, can an algorithm’s prediction be considered valid evidence? In some jurisdictions, courts have allowed predictive tools to guide bail and sentencing decisions. They look at factors like prior offenses, age, and location to assess “risk.” But when a judge relies heavily on AI, it can create serious issues around fairness and due process.

One big legal hurdle is transparency. If the defense can’t understand how the prediction was made—because the algorithm is proprietary or too complex—how can they argue against it? It’s like being accused by a ghost. You can’t cross-examine an algorithm. You can’t ask it why it decided you were dangerous. And that’s a huge problem in any legal system built on the principle of “innocent until proven guilty.”

Another issue is consistency. Different AI tools might analyze the same data and produce wildly different results. So, whose prediction gets to carry the most weight? And how do we know which tool is the most accurate or fair?

There’s also the matter of liability. If someone is wrongfully arrested or sentenced based on an AI prediction, who’s responsible? The developers? The police department? The judge who relied on the system? Legal scholars are still debating these questions, and right now, there are more questions than answers.

In the U.S., some lawmakers are beginning to call for stricter regulations or even bans on using predictive AI in courtrooms. In the EU, the GDPR restricts decisions based solely on automated processing and is widely read as giving individuals a “right to explanation” of how such decisions are made about them. These kinds of protections are crucial if we’re going to let AI Crime Prediction Tools play any role in legal decision-making.

Until we sort these legal gray areas out, relying on AI in criminal justice systems feels like skating on thin legal ice.

Human Rights vs. Algorithmic Policing

Let’s not sugarcoat it—AI Crime Prediction Tools present serious risks to human rights. When you start predicting behavior, you tread dangerously close to violating people’s freedoms. Privacy, freedom of movement, the right not to be profiled—these aren’t optional extras in a democracy. They’re the foundation.

Take privacy, for example. Predictive policing requires constant surveillance, data collection, and monitoring. That means tracking people’s movements, scanning their social media activity, and analyzing their interactions. Even if someone hasn’t done anything wrong, they could be flagged as suspicious just because the algorithm says so. How’s that for Big Brother?

Then there’s the chilling effect. If people know they’re being watched and judged by an invisible system, they start to self-censor. They may avoid certain neighborhoods, social groups, or even topics of conversation. That’s not safety—it’s social control.

Let’s not forget discrimination. These tools are more likely to target marginalized communities, amplifying existing inequalities. If a community is already struggling with over-policing and underinvestment, AI-driven surveillance adds another layer of scrutiny.

International human rights groups have started raising red flags. Amnesty International and Human Rights Watch have published reports warning that AI in policing could violate basic human rights. In some places, AI Crime Prediction Tools have already led to public protests and court challenges.

So the question isn’t just “Do they work?” but also “Do they respect our rights?” If safety comes at the cost of freedom, we need to seriously rethink what we’re willing to accept.

Transparency and Accountability—Who’s Watching the Watchers?

Here’s the kicker: AI Crime Prediction Tools don’t operate in a vacuum. They are created by humans, deployed by law enforcement, and used in systems that impact real lives. But who’s holding these tools accountable? Right now, the answer is hardly anyone.

Let’s break it down. Most of these tools are built by private companies. That means the source code and algorithmic logic are often protected under intellectual property laws. It sounds fair in the business world. But this isn’t about shopping carts or weather apps—this is about people’s freedom and futures. When these tools influence who gets arrested or monitored, the public has a right to know how they work. Yet, most of the time, we’re left in the dark.

Accountability gets even murkier when things go wrong. Say an AI tool misidentifies someone, and they’re arrested, surveilled, or even harmed—what’s the recourse? Can the individual sue the developers? Can the police be held liable for relying on an inaccurate prediction? In many jurisdictions, the legal system hasn’t caught up. It’s like being hit by a driverless car, only to find out no one’s really in charge.

Another major flaw in the current system is the lack of third-party audits. Unlike financial systems or even environmental standards, AI tools in policing aren’t routinely audited by independent experts. That means any bias, coding errors, or misuse can go unchecked for years. It’s like letting a plane fly without ever checking the engines.
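
What might an independent audit actually check? One common starting point is whether the tool wrongly flags people in one group more often than in another. The Python sketch below computes a false positive rate per group from a small, entirely invented set of records. The record layout (group, was flagged, later offended) and the function name are assumptions made for illustration, and a real audit would look at far more than this one metric.

```python
from collections import defaultdict

def false_positive_rate_by_group(records):
    """Per group: share of people who did NOT offend but were flagged anyway."""
    flagged = defaultdict(int)
    non_offenders = defaultdict(int)
    for group, was_flagged, offended in records:
        if offended:
            continue  # false positives only concern people who did not offend
        non_offenders[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {g: flagged[g] / non_offenders[g] for g in non_offenders}

# Invented records: (group, was_flagged, offended)
records = [
    ("X", True, False), ("X", True, False), ("X", False, False),
    ("X", False, False), ("X", True, True),
    ("Y", True, False), ("Y", False, False), ("Y", False, False),
    ("Y", False, False), ("Y", True, True),
]

rates = false_positive_rate_by_group(records)
print(rates)
```

In this made-up data, people in group X who did nothing wrong are flagged twice as often as those in group Y, which is exactly the kind of gap an outside auditor would want explained.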

And here’s something to think about—if these tools are meant to serve the public, shouldn’t the public have a say? Very few communities get to vote on whether AI should be used in their police departments. Often, these tools are implemented quietly, with little to no input from the people they affect most.

Alternatives to Predictive Policing—What Else Can We Do?

Let’s take a step back and ask the big question: Do we need AI Crime Prediction Tools to fight crime? The answer might surprise you. While these tools offer data-driven insights, they are far from the only option and arguably not the best one.

One powerful alternative is community-based policing. This approach focuses on building trust between officers and the neighborhoods they serve. Instead of relying on algorithms to dictate patrols, officers engage with residents, understand local issues, and work together to prevent crime. Cities like Camden, New Jersey, have seen major drops in violent crime through this method—no predictive tech needed.

Another effective tool? Investment in social services. Many crimes stem from poverty, lack of education, mental health issues, or substance abuse. Rather than predicting where crimes will happen, why not address the root causes? Programs that provide job training, addiction counseling, or youth outreach can be far more effective in the long run than sending patrol cars to algorithmically determined “hot spots.”

There’s also the potential of using AI in more transparent and supportive ways. For example, AI can help identify officers at risk of misconduct, monitor public complaints in real time, or analyze traffic stop data for racial profiling. These are applications that empower communities rather than police them.

And let’s not forget human judgment. AI will never be able to fully replace empathy, intuition, or life experience, no matter how sophisticated it gets. Human oversight is essential in any justice system, especially one that deals with life-altering decisions.

The bottom line? We don’t have to put all our eggs in the AI basket. There are smarter, safer, and more equitable ways to create safer communities—ones that don’t rely on the crystal ball of predictive policing.

Public Perception—Fear, Trust, and the Future of Safety

Ask ten people on the street what they think about AI Crime Prediction Tools, and you’ll probably get ten different answers. That’s because public perception of this technology is all over the map—some see it as a breakthrough, others as a ticking time bomb.

On one hand, there’s fear. People worry that AI will lead to more surveillance, more false arrests, and fewer rights. This fear isn’t unfounded, especially in communities that have already suffered from over-policing. The idea of being judged by a machine is unsettling, like being followed around by a robot that doesn’t blink.

On the other hand, there’s hope. Some see these tools as a way to bring consistency and objectivity into a deeply flawed system. In theory, AI can remove human bias and make decisions based on data rather than gut feeling. That’s a powerful promise, especially in systems where injustice has been baked in for decades.

But here’s the catch: trust. For any tech, especially one as sensitive as crime prediction, to be accepted, the public must trust it. That means transparency, fairness, and accountability. Without these, even the best tool will be met with suspicion and resistance.

Education plays a huge role in shaping perception. Most people don’t know how these tools work, who develops them, or how their data is used. Clear, honest communication from governments and developers can go a long way in bridging this knowledge gap.

And let’s not forget media coverage. The way news outlets report on predictive policing can either stoke fear or build understanding. Balanced reporting that highlights both successes and failures is essential.

AI Crime Prediction Tools won’t succeed—or fail—on their technology alone. Their fate depends on how well they align with the values of the communities they aim to protect.

The Global View—How Other Countries Use AI in Policing

The use of AI Crime Prediction Tools isn’t just a U.S. phenomenon—it’s global. From Europe to Asia, governments are exploring how AI can be used to fight crime, often with very different results.

In China, for instance, AI surveillance is on a whole different level. Authorities use facial recognition, big data, and predictive analytics not just to track criminals but to monitor the general population. Their “social credit” system even ties behavior to personal scores, affecting everything from job eligibility to travel rights. For many, it’s a glimpse into an Orwellian future.

Some European nations, on the other hand, have adopted a more cautious stance. The European Union has strict data protection laws under the GDPR, and many countries require impact assessments before deploying AI in public services. In the Netherlands, for example, a court in 2020 struck down SyRI, a risk-scoring system used to detect welfare fraud, ruling that it violated privacy rights amid concerns that it was discriminatory.

India and Brazil have both piloted versions of predictive policing, but public backlash and data privacy concerns have limited their adoption. In South Africa, similar efforts have focused more on crime mapping rather than individual prediction, identifying high-risk zones rather than flagging specific people.

The global trend shows a growing interest in AI policing, but also a wide range of legal, ethical, and cultural responses. Countries with stronger privacy protections are slower to adopt these tools, while those with authoritarian tendencies may embrace them more fully.

So, what can we learn from the global picture? AI Crime Prediction Tools are not one-size-fits-all. Their effectiveness—and acceptability—depend heavily on how they’re implemented, who controls them, and what safeguards are in place. Learning from the successes and failures of other nations is crucial if we want to avoid repeating their mistakes.

The Road Ahead—Regulations, Reforms, and Responsibilities

As AI Crime Prediction Tools become more widespread, the path forward needs more than just innovation—it needs intention. If we don’t act now to implement strong policies, we risk handing over public safety decisions to systems that few understand and even fewer can control.

First, regulation is key. Governments must develop clear, enforceable laws that outline how these tools can be used. This includes requiring transparency in how predictions are made, mandating third-party audits, and ensuring people have a right to appeal or contest decisions influenced by AI. Think of it as a “Bill of Rights” for algorithmic policing.

Next, we need reform. Law enforcement agencies must be trained not just in how to use these tools but also in understanding their limitations. AI should be a guide, not a final judge. Officers must remain accountable for their actions, regardless of what the software suggests.

And then there’s corporate responsibility. Tech companies that develop AI Crime Prediction Tools need to embrace ethical design principles from the start. That means avoiding biased data, providing explainable models, and ensuring their tools can be scrutinized by the public. Just like food labels list ingredients, AI tools should disclose how they operate and what data they use.

Public oversight is yet another essential component. Civil society organizations, journalists, and watchdog groups should be empowered to investigate and report on AI use in policing. Community input shouldn’t be an afterthought; it should be the foundation of any deployment.

Lastly, we must build a culture of accountability. Every stakeholder, from developers to decision-makers, must answer to the people their tools affect. Because, in the end, safety doesn’t come from software alone. It comes from trust, fairness, and a shared commitment to justice.

Conclusion

The conversation around AI Crime Prediction Tools is more than a tech debate—it’s a moral and social reckoning. On the surface, these tools promise safer streets and faster justice. But dig deeper, and you uncover layers of bias, ethical landmines, legal uncertainty, and human rights concerns.

Are they inherently evil? Not necessarily. But they’re certainly not ready to replace traditional policing or judicial oversight. Like any tool, they can be misused, especially when accountability is weak and transparency is lacking.

We must approach AI in crime prediction with caution, skepticism, and a commitment to ethical innovation. That means asking hard questions, demanding better answers, and never losing sight of the human lives behind the data points.

Because at the end of the day, public safety isn’t just about stopping crime. It’s about preserving the freedoms that make society worth protecting in the first place.